The Role of SEO Spider in Website Analysis and Optimisation

Search engine optimisation (SEO) is a critical component of any successful online presence. It involves various strategies and tools to improve a website’s visibility on search engine results pages. One tool that plays a central role in SEO analysis and optimisation is the SEO spider.

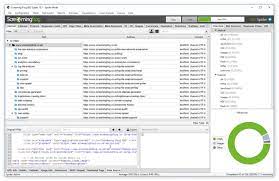

An SEO spider is a web crawler: a software program that scans a website in much the same way a search engine bot does. It works by crawling through the different pages of a website, following links, and collecting data about the site’s structure, content, and metadata. Search engines run crawlers to build their indexes, while site owners run SEO spiders to see their site the way those crawlers see it.
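The link-following step described above can be sketched with Python’s standard library. This is a minimal illustration, not how any particular SEO spider is implemented; the `LinkExtractor` class, the `extract_links` helper, and the `example.com` URLs are assumptions made for the example.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urldefrag


class LinkExtractor(HTMLParser):
    """Collects the href of every <a> tag, resolved against the page URL."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if href:
            # Resolve relative links and drop any #fragment.
            absolute, _fragment = urldefrag(urljoin(self.base_url, href))
            self.links.append(absolute)


def extract_links(html, base_url):
    parser = LinkExtractor(base_url)
    parser.feed(html)
    return parser.links


# Hypothetical page content, used only to show the output shape.
sample_html = '<a href="/about">About</a> <a href="blog/post-1#top">Post</a>'
links = extract_links(sample_html, "https://example.com/")
```

A crawler would repeat this for every newly discovered URL on the same site, keeping a set of pages it has already visited.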

One of the key functions of an SEO spider is to identify technical issues that may affect a website’s performance in search engine rankings. It can uncover broken links, duplicate content, missing meta tags, slow loading pages, and other issues that may hinder a site’s visibility.

Moreover, an SEO spider helps in analysing the internal linking structure of a website. By crawling through the site’s pages and identifying how they are connected through links, it provides valuable insights into how search engines perceive the site’s hierarchy and relevance.
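One common way to summarise an internal linking structure is click depth: the fewest links a visitor or spider must follow from the home page to reach each page. The sketch below assumes the crawl has already produced a dictionary mapping each page to the pages it links to; the `click_depths` function and the sample site are hypothetical.

```python
from collections import deque


def click_depths(link_graph, start):
    """Breadth-first search: fewest clicks needed to reach each page from start."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in link_graph.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical site: each key links to the pages in its list.
site = {
    "/": ["/products", "/blog"],
    "/products": ["/products/widget"],
    "/blog": ["/blog/post-1"],
    "/blog/post-1": ["/products/widget"],
    "/orphan": ["/"],
}
depths = click_depths(site, "/")
# Pages that exist but cannot be reached by following links from the home page.
orphans = sorted(set(site) - set(depths))
```

Pages with a high click depth, or pages that never appear in `depths` at all, are candidates for additional internal links.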

Furthermore, an SEO spider supports keyword work by reporting the keywords actually used across a website. It helps in identifying opportunities for improving keyword targeting and ensuring that relevant keywords are strategically placed throughout the site’s content.

In addition to analysis, an SEO spider also plays a crucial role in monitoring changes made to a website over time. By regularly crawling the site and comparing data from previous crawls, it helps in tracking improvements, detecting new issues, and ensuring that SEO efforts are yielding positive results.
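Comparing crawls can be as simple as diffing two snapshots. The sketch below assumes each crawl is stored as a dictionary of URL to HTTP status code, which is an illustrative format rather than the export format of any specific tool; `compare_crawls` and the sample data are hypothetical.

```python
def compare_crawls(previous, current):
    """Compare two crawls given as {url: HTTP status code} dictionaries."""
    shared = set(previous) & set(current)
    return {
        "new_pages": sorted(set(current) - set(previous)),
        "removed_pages": sorted(set(previous) - set(current)),
        # Pages that responded normally before and now return 4xx/5xx.
        "newly_broken": sorted(u for u in shared if previous[u] < 400 <= current[u]),
        # Pages that returned 4xx/5xx before and now respond normally.
        "fixed": sorted(u for u in shared if current[u] < 400 <= previous[u]),
    }


# Hypothetical snapshots from two crawls of the same site.
last_month = {"/": 200, "/old": 200, "/pricing": 404, "/blog": 200}
this_month = {"/": 200, "/pricing": 200, "/blog": 404, "/new": 200}
report = compare_crawls(last_month, this_month)
```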

In conclusion, an SEO spider is an indispensable tool for website analysis and optimisation. By providing valuable insights into technical issues, internal linking structures, and keyword usage, and by monitoring changes over time, it helps businesses enhance their online visibility and improve their search engine rankings.

8 Essential Tips for Optimising Your Website for SEO Spiders

- Ensure your website is crawlable by search engine spiders.

- Use a sitemap to help search engine spiders navigate your site more effectively.

- Optimise your URLs with relevant keywords for better spider indexing.

- Check for broken links and fix them to improve spider crawling efficiency.

- Avoid duplicate content as it can confuse search engine spiders and affect rankings.

- Monitor your site’s loading speed as faster sites are preferred by search engine spiders.

- Include meta tags with relevant keywords to provide clear signals to search engine spiders.

- Regularly check for and fix any crawl errors reported in Google Search Console.

Ensure your website is crawlable by search engine spiders.

To maximise the effectiveness of your SEO efforts, it is crucial to ensure that your website is easily crawlable by search engine spiders. This means structuring your site in a way that allows spiders to navigate through its pages efficiently and index relevant content. By optimising your website’s internal linking structure, avoiding broken links, and providing clear sitemaps, you can help search engine spiders discover and index your content effectively. Ensuring crawlability not only improves your site’s visibility in search engine results but also enhances the overall user experience by making it easier for visitors to find the information they are looking for.
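A quick crawlability check is to confirm that robots.txt does not block pages you want indexed. The sketch below uses Python’s standard `urllib.robotparser` on an inline robots.txt so that it runs offline; the rules and URLs are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real check would load the file from the site.
robots_txt = """\
User-agent: *
Disallow: /admin/
Allow: /
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

can_fetch_blog = parser.can_fetch("Googlebot", "https://example.com/blog/post-1")
can_fetch_admin = parser.can_fetch("Googlebot", "https://example.com/admin/login")
```

Python’s parser applies the first rule that matches, whereas search engines may resolve conflicting rules differently, so treat the result as a first check rather than a guarantee.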

Use a sitemap to help search engine spiders navigate your site more effectively.

Using a sitemap is a valuable step when preparing your site for spiders. By creating and submitting a sitemap to search engines, you can assist search engine spiders in navigating your site more efficiently. A sitemap acts as a roadmap of your website, outlining the structure and hierarchy of its pages. This helps search engine spiders to crawl and index your site’s content accurately and makes it more likely that all important pages are discovered. Implementing a sitemap is a proactive step towards improving the visibility and accessibility of your website for search engines, which supports better SEO performance.
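A basic XML sitemap can be generated from a list of URLs. The sketch below follows the sitemaps.org 0.9 format using the standard library; the `build_sitemap` function, the URLs, and the dates are assumptions made for the example.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, last_modified) pairs; dates are YYYY-MM-DD."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages; replace with the URLs you want search engines to find.
sitemap_xml = build_sitemap([
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/blog/post-1", "2024-02-01"),
])
```

The resulting string would typically be saved as `sitemap.xml` at the site root and submitted through each search engine’s webmaster tools.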

Optimise your URLs with relevant keywords for better spider indexing.

To help SEO spiders index your website, optimise your URLs by incorporating relevant keywords. By including targeted keywords in your URLs, you provide search engine spiders with clear signals about the content and relevance of each page on your site. This practice helps the indexing process and can improve the visibility of your website in search engine results. Descriptive URLs can also support rankings for relevant search queries and make it easier for users to find and navigate your content online.
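Keyword-bearing URLs are often produced by turning a page title into a slug. The following is one possible approach, not a standard; the `slugify` function and the sample title are hypothetical.

```python
import re
import unicodedata


def slugify(title):
    """Turn a page title into a lowercase, hyphen-separated URL slug."""
    # Strip accents so "Café" becomes "Cafe".
    ascii_text = (
        unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    )
    # Replace every run of non-alphanumeric characters with a single hyphen.
    return re.sub(r"[^a-z0-9]+", "-", ascii_text.lower()).strip("-")


slug = slugify("10 Best Café Chairs (2024 Guide)!")
# slug is "10-best-cafe-chairs-2024-guide"
```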

Check for broken links and fix them to improve spider crawling efficiency.

Broken links create a poor user experience and hinder the efficiency of spider crawling. By using an SEO spider to identify broken links and then fixing them, website owners can ensure that search engine spiders can easily navigate through the site and index its content effectively. This improves overall visibility on search engine results pages and can lead to better rankings and increased organic traffic.
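Once a crawl has recorded which pages link where and what status each URL returned, broken links can be listed together with the pages that contain them. The data structures and the `find_broken_links` function below are assumptions for illustration.

```python
def find_broken_links(outlinks, status_codes):
    """Return (source_page, target_url, status) for every link to a 4xx/5xx URL."""
    broken = []
    for source, targets in outlinks.items():
        for target in targets:
            status = status_codes.get(target)
            # URLs with no recorded status are skipped rather than guessed at.
            if status is not None and status >= 400:
                broken.append((source, target, status))
    return broken


# Hypothetical crawl data.
outlinks = {
    "/": ["/about", "/pricing"],
    "/about": ["/team", "/pricing"],
}
status_codes = {"/about": 200, "/pricing": 404, "/team": 500}
broken_links = find_broken_links(outlinks, status_codes)
```

Reporting the source page alongside the broken target shows exactly where each link needs to be edited.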

Avoid duplicate content as it can confuse search engine spiders and affect rankings.

To optimise your website effectively using an SEO spider, it is crucial to avoid duplicate content. Duplicate content can create confusion for search engine spiders and have a negative impact on your website’s rankings. When search engines encounter identical or very similar content across multiple pages, they may struggle to determine which version is the most relevant to display in search results. This can lead to lower visibility and decreased organic traffic for your site. By ensuring that each page on your website offers unique and valuable content, you can help search engine spiders better understand and index your site, ultimately improving your SEO performance.
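Exact duplicates can be found by hashing each page’s text after light normalisation. This sketch only catches pages that are identical once case and whitespace are ignored; near-duplicate detection needs fuzzier techniques. The function names and sample pages are hypothetical.

```python
import hashlib
import re
from collections import defaultdict


def content_fingerprint(text):
    """Hash the text after lowercasing and collapsing whitespace."""
    normalised = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalised.encode("utf-8")).hexdigest()


def find_duplicates(pages):
    """Group URLs whose body text is identical after normalisation."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[content_fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


# Hypothetical extracted body text for three URLs.
pages = {
    "/shoes": "Red  running shoes",
    "/shoes?ref=home": "red running shoes\n",
    "/hats": "Blue hats",
}
duplicate_groups = find_duplicates(pages)
```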

Monitor your site’s loading speed as faster sites are preferred by search engine spiders.

Monitoring your site’s loading speed is an important part of preparing for search engine spiders. Search engines tend to favour faster websites because they provide a better user experience, and a server that responds quickly lets spiders crawl more pages in the same amount of time. By keeping a close eye on your site’s loading speed and making necessary improvements, you can help search engine spiders crawl and index your website efficiently, improving your chances of achieving better visibility and rankings on search engines.
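A rough way to monitor response time is to time a few requests per URL and keep the fastest. The sketch below takes the fetch function as a parameter so that it runs offline with a stand-in; `fastest_response`, `fake_fetch`, and the one-second threshold are all assumptions for illustration.

```python
import time


def fastest_response(fetch, url, attempts=3):
    """Call fetch(url) several times; return the shortest duration in seconds."""
    durations = []
    for _ in range(attempts):
        started = time.perf_counter()
        fetch(url)
        durations.append(time.perf_counter() - started)
    return min(durations)


def fake_fetch(url):
    # Stand-in for a real HTTP request so the sketch runs without a network.
    time.sleep(0.01)


seconds = fastest_response(fake_fetch, "https://example.com/")
is_slow = seconds > 1.0  # hypothetical threshold; tune it for your own site
```

For a real measurement, `fake_fetch` could be replaced with a function that calls `urllib.request.urlopen(url).read()`. That times only the HTML download, not images, scripts, or rendering, so it complements rather than replaces a full page speed test.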

Include meta tags with relevant keywords to provide clear signals to search engine spiders.

Including meta tags with relevant keywords is a crucial tip when using an SEO spider to optimise a website. Meta tags, such as meta titles and descriptions, provide valuable information about the content of a web page to search engine spiders. By incorporating relevant keywords into these meta tags, website owners can send clear signals to search engine spiders about the focus and relevance of their content. This practice not only helps in improving the visibility of the website in search engine results but also enhances the overall user experience by providing accurate and informative snippets in search engine listings.
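A simple audit can flag pages whose title or meta description is missing or overly long. The sketch below uses the standard `html.parser`; the `MetaAudit` class, the `audit_meta` function, and the 60 and 160 character limits are rule-of-thumb assumptions, not values published by any search engine.

```python
from html.parser import HTMLParser


class MetaAudit(HTMLParser):
    """Captures the <title> text and the meta description of one page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = None
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = attrs.get("content") or ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit_meta(html, max_title=60, max_description=160):
    """Return a list of issues found in the page's title and meta description."""
    parser = MetaAudit()
    parser.feed(html)
    title = parser.title.strip()
    issues = []
    if not title:
        issues.append("missing title")
    elif len(title) > max_title:
        issues.append("title too long")
    if not parser.description:
        issues.append("missing meta description")
    elif len(parser.description) > max_description:
        issues.append("meta description too long")
    return issues


# Hypothetical page with a title but no meta description.
issues = audit_meta("<html><head><title>Shop</title></head></html>")
```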

Regularly check for and fix any crawl errors reported in Google Search Console.

Regularly checking for and addressing any crawl errors reported in Google Search Console complements the audits you run with an SEO spider. By identifying and fixing crawl errors promptly, website owners can ensure that search engine bots can properly index their site’s content. This proactive approach helps in maintaining a healthy website structure, improving overall visibility on search engine results pages, and enhancing the user experience. Ignoring crawl errors can lead to indexing issues and decreased organic traffic. Therefore, staying on top of crawl errors through Google Search Console is essential for maintaining a well-optimised website that performs well in search engine rankings.
